‘Deepfaker’ is a new term for those who alter actual footage to look better online or sound better than they do in person. With tools like Sora, a new video model from OpenAI, the company behind ChatGPT, anyone can now generate their own AI video.

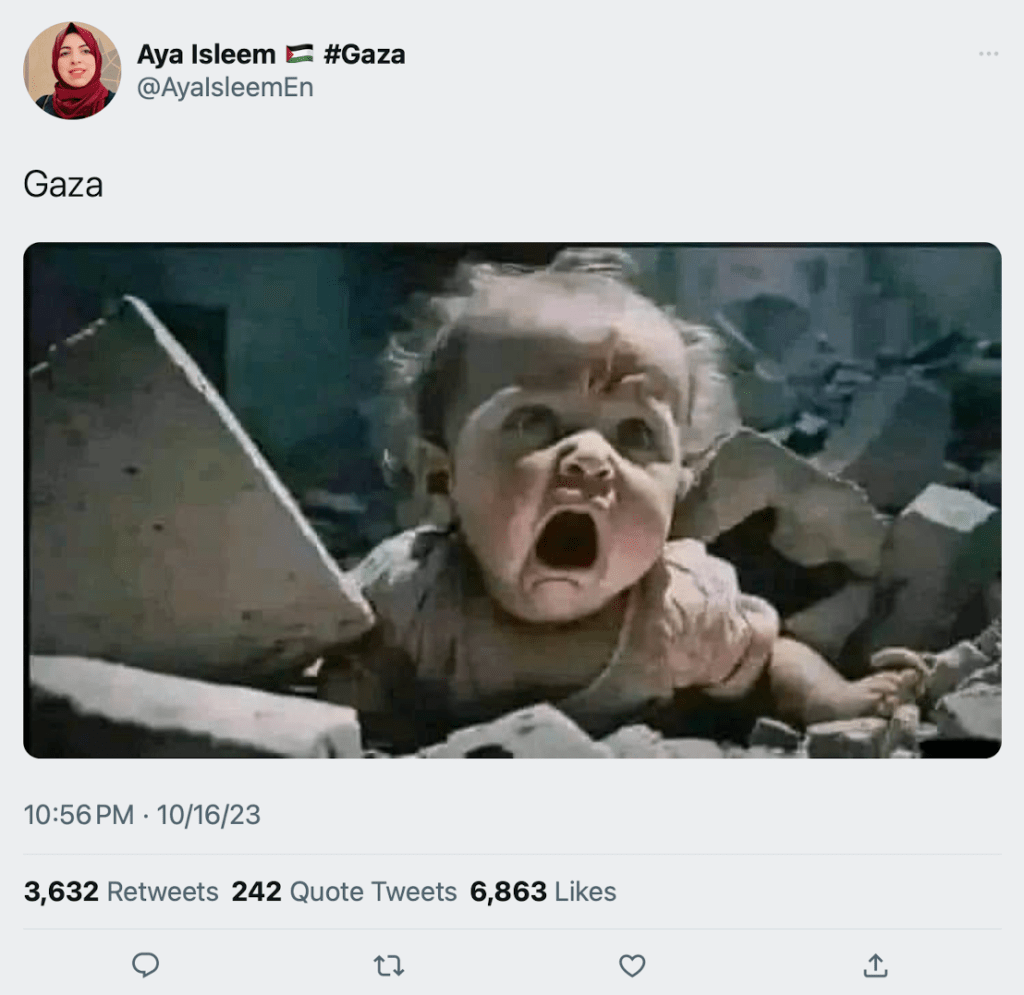

“[F]ake images, audio, speeches, and clips are cheap and easy to produce…and malign actors could use AI-generated deepfakes to hack and tarnish our political system,” said Hugo Rifkind in his article in The Times. The media, however, can still fight, if not stop, the proliferation of AI and other new technologies used with harmful intent.

“Type in a prompt—person X does thing Y in place z—and boom, out it comes,” Rifkind explained. He added that emerging technologies can not only estrange the digital record from objective reality but separate the two completely. Another danger of new technology, according to him, is the smearing of opponents or the airbrushing of unflattering political reality.

Even if there seems to be no harm in smoothing one’s skin or fixing one’s hair during Zoom calls, Rifkind said that “there still needs to be a safe, working assumption”, especially when portraying politics and other realities. “Doubt it once and you doubt it forever,” he warned.

A skeptical attitude comes in handy, especially for young people, who now rely heavily on social media for news and public affairs.

According to Steven Swinford, political editor of The Times, “young people get most of their news from social media even though they think that it is far less trustworthy than television or newspapers.” He cited a poll saying that 40% of young people (aged 18-24) follow the news on social media websites “even though they think they are less trustworthy than TV, news websites, and newspapers.”

Although members of Gen Z trust social media for news less than traditional sources, they still prioritize content over truth, according to Lee Cain, a founding partner of Charlesbye.

To combat these risks, Rifkind proposed that the media must be the antidote to fakery rather than a channel for it. “There should be no greater shame for a journalist than spreading a fraud. Indeed, in a world where traditional media must now jostle online alongside anybody else with a smartphone, the defining characteristic of a professional has to be that this is exactly what you never do.”

These fast-improving technologies do more than help children with their education or keep them going during lockdowns. They also pose threats to untrained minds. According to 14-year-old GCSE student Alexander Browder, “every platform runs on algorithms designed to exploit your interests. An 11-year-old is targeted as much as an adult.”

A 2018 study published by the Centre for Addiction and Mental Health in Canada said that young social media users rate their mental health as “poor”. Most of them spend five or more hours per day on their gadgets.

“The bullying and hatred on social media makes children feel diminished. This leads to anxiety, depression, or worse. We can’t turn back the clock—more than half of the world’s population has a social media account—but we can focus on curbing harmful side-effects,” Browder said in The Times.

He argues that algorithms should be transparent and that people should know what factors shape their online diet so that they can regulate and oversee them accordingly. “Platforms must be accountable for posts that cause self-harm and depression. Social media firms must find them and ban them, along with explicit content targeted at minors. Don’t listen to the tech giants’ excuses that they’re trying their best.”

This is why, in the contemporary world, companies and firms are encouraged not to shy away from or be hostile to the media. They should see and appreciate the impact and role of the media today.

According to Rohan Banerjee of Raconteur, whose piece was also published in The Times, the media can be partners in presenting what is real, especially for executives and administrations seeking “to convey authenticity, passion, and a genuine knowledge of a product or service.”

“Online harm is currently the domain of technology companies almost exclusively, and so they have much to teach regulators about the reality of confronting it,” wrote Noam Schwartz for the World Economic Forum. In his view, by coming together and collaborating with legislators and tech companies rather than “stirring the pot and driving public scrutiny”, the media can help prevent online harm.