Vhen Jesus Fernandez

Some people prefer authentic photos to ones that have been altered with editing software, especially when the subjects are prominent individuals or groups such as the royal family.

Maura Grossman, a research professor of computer science and an expert in image manipulation, told NBC News: “People want to see it as ‘this is fake image, this is not.’” She cautioned that the wide range of tools for media manipulation can make it harder to decide what counts as real.

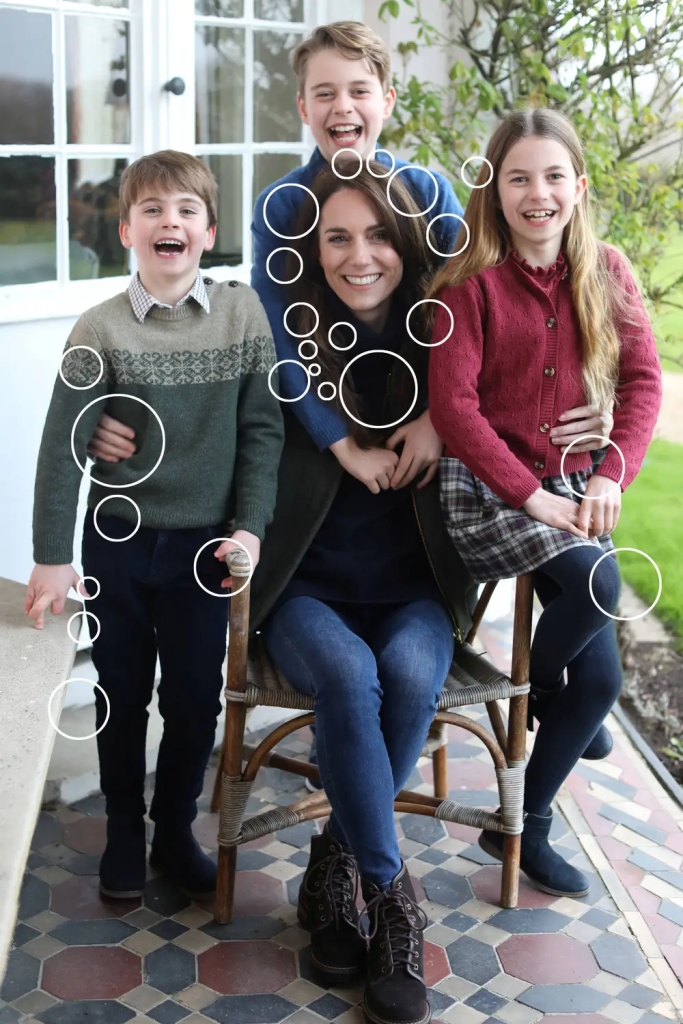

Edited photos and AI-generated content are major red flags for these viewers. This became even more evident when a photo of the Princess of Wales with her three children drew mixed reactions. The photo, said Kate Mansey in The Times, “was supposed to be a reassuring picture of the princess’s health”. Instead, it was hit with a “kill notice”, a message telling editors and librarians to remove it from their databases because it had been “manipulated”.

Manipulating photos of royals and other prominent people is nothing new in the industry, at least according to veteran royal photographer and historian Ian Lloyd: “I’ve never known picture agencies to refuse royal photographs like this, but in a way, the manipulation of royal images is nothing new.”

The debate over authenticity extends beyond edited images: publishers and content producers have recently called on tech companies to acknowledge the original sources of the material they use. According to Tom Howard and Katie Prescott in The Times, the Publishers Association, a group representing the biggest publishers in the UK, has asked technology companies to “pay to use their content from books, journals, and papers to build their artificial intelligence models.”

The request came after publishers such as Penguin Random House, HarperCollins, and Oxford University Press expressed their “deep concerns” over “vast amounts of copyright-protected works” being used by tech businesses in their AI programs without authorization.

The association also made clear that its members had not granted permission to Google’s DeepMind, Meta (Facebook’s parent company), or OpenAI’s ChatGPT “for the use of any of their copyright-protected works in relation to, without limitation, the training, development or operation of AI models including large language models or other generative AI.”

Although people ask for authentic photos over edited or manipulated ones, the issue should not be blown out of proportion. Renowned portrait photographer Robert Wilson said that editing photos, or manipulating them, “has gone on since photography began,” so no one should be shocked that the Princess of Wales did the same thing to her image. Claire Cohen in The Times framed the issue as a debate between aesthetics (making the image look nicer) and ethics (changing the story). Agnes Venema, an expert in deepfakes, said that “[s]uch minor changes aren’t likely to raise concerns when they’re retouched on an average family photo. But this isn’t your average family.”